It turns out there is a lot of research from other industries about incidents in complex systems. Our incidents in distributed software systems conform to the models from those industries.

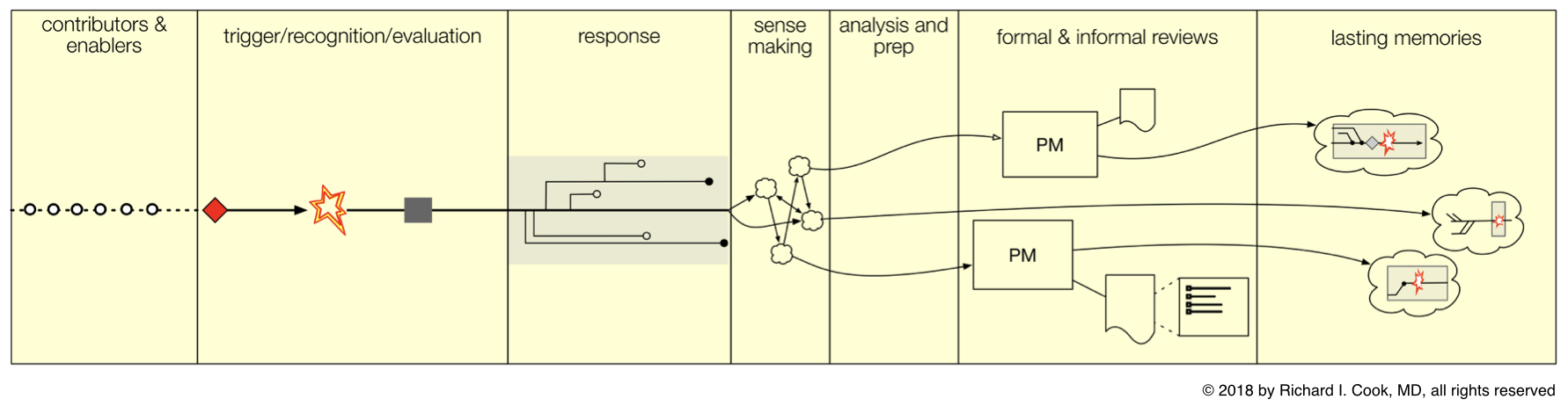

Incident sequence. Copyright 2018 Richard Cook, M.D. Used with permission.

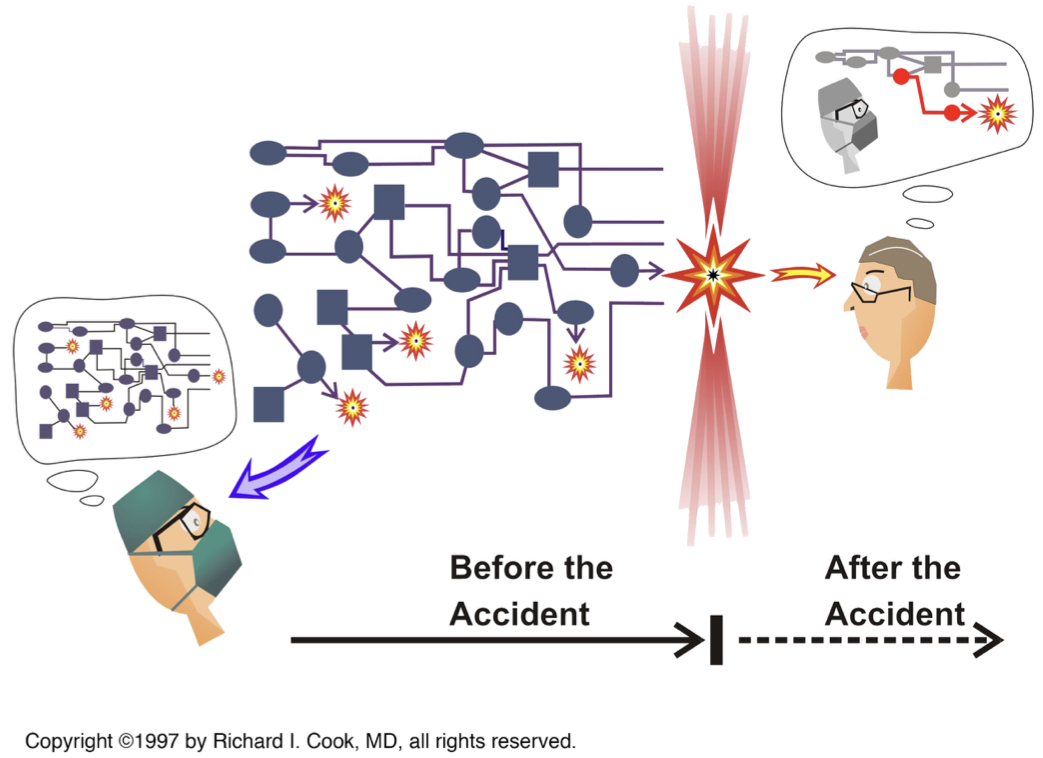

Hindsight bias. Copyright 1997 Richard Cook, M.D. Used with permission.

On the left side of the incident we have all the combinatorial complexity narrowed by the unique expertise of our people. On the right side of the incident we've learned something we didn't know before the incident.

The incident begins. We are Theseus entering the Labyrinth. Daedalus designed this maze to prevent the Minotaur's escape. Even if we succeed, how will we find our own way out? Ariadne offers us a sword and a ball of twine as we enter the terrifying unknown. (This tale was also published on Twitter.)

The incident is contained. We have slain the Minotaur. Our way out is trivial, even boring: follow the trail of twine we have left behind. No other twist or turn catches our attention. We even fail to notice how essential, how simple it was to mark our path with twine.

On our victorious journey home, so confident in our heroism, we even abandon Ariadne on Naxos and fail to change the color of our sails to signal the victory to Dad. We leave the most valuable lessons behind.

For a less mythological telling, see "Perspectives on Human Error: Hindsight Bias and Local Rationality" (1999), available on CiteSeer.

"Hindsight is 20/20" for ONE PATH through the maze of the incident. But it also blinds us to what made the journey actually heroic... blinds us to the critical role others played in our success... blinds us to the most mundane tools that were essential to our escape.